It has been a wildly busy week in AI news thanks to OpenAI, including a controversial blog post from CEO Sam Altman, the wide rollout of Advanced Voice Mode, 5GW data center rumors, major staff shake-ups, and dramatic restructuring plans.

But the rest of the AI world doesn't march to the same beat, doing its own thing and churning out new AI models and research by the minute. Here's a roundup of some other notable AI news from the past week.

Google Gemini updates

On Tuesday, Google announced updates to its Gemini model lineup, including the release of two new production-ready models that iterate on past releases: Gemini-1.5-Pro-002 and Gemini-1.5-Flash-002. The company reported improvements in overall quality, with notable gains in math, long-context handling, and vision tasks. Google claims a 7 percent increase in performance on the MMLU-Pro benchmark and a 20 percent improvement on math-related tasks. But as you know if you've been reading Ars Technica for a while, AI benchmarks typically aren't as useful as we would like them to be.

Along with the model upgrades, Google introduced substantial price reductions for Gemini 1.5 Pro, cutting input token costs by 64 percent and output token costs by 52 percent for prompts under 128,000 tokens. As AI researcher Simon Willison noted on his blog, "For comparison, GPT-4o is currently $5/[million tokens] input and $15/m output and Claude 3.5 Sonnet is $3/m input and $15/m output. Gemini 1.5 Pro was already the cheapest of the frontier models and now it's even cheaper."
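To make per-million-token pricing concrete, here is a minimal Python sketch that estimates the cost of a single request using the GPT-4o and Claude 3.5 Sonnet list prices Willison quotes, plus a helper that applies a percentage cut like the ones Google announced. The request size (100,000 input tokens, 10,000 output tokens), the $10 example price, and the helper names are hypothetical, chosen only for illustration; the sketch also ignores real billing details such as caching or long-prompt price tiers.

```python
# Hypothetical cost calculator. Prices are the per-million-token list prices
# quoted above (USD); real billing may involve tiers and other details
# that this sketch ignores.
PRICES_PER_MILLION = {
    "gpt-4o": {"input": 5.00, "output": 15.00},
    "claude-3.5-sonnet": {"input": 3.00, "output": 15.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request for a model in PRICES_PER_MILLION."""
    prices = PRICES_PER_MILLION[model]
    total = input_tokens * prices["input"] + output_tokens * prices["output"]
    return total / 1_000_000


def apply_cut(price: float, percent: float) -> float:
    """Return a price after a percentage reduction (a 64% cut keeps 36%)."""
    return price * (1 - percent / 100)


if __name__ == "__main__":
    # Hypothetical workload: 100,000 input tokens, 10,000 output tokens.
    for name in PRICES_PER_MILLION:
        print(f"{name}: ${request_cost(name, 100_000, 10_000):.2f}")
    # Illustrative only: 64% and 52% cuts applied to a made-up $10 price.
    print(f"64% cut: ${apply_cut(10.0, 64):.2f}")
    print(f"52% cut: ${apply_cut(10.0, 52):.2f}")
```

For this made-up workload, GPT-4o comes to $0.65 and Claude 3.5 Sonnet to $0.45. Because both charge $15 per million output tokens, the entire $0.20 gap comes from input pricing, which is also where Google's larger (64 percent) cut lands.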

Google also increased rate limits, with Gemini 1.5 Flash now supporting 2,000 requests per minute and Gemini 1.5 Pro handling 1,000 requests per minute. Google reports that the latest models offer twice the output speed and three times lower latency compared to previous versions. These changes may make it easier and cheaper for developers to build applications with Gemini than before.

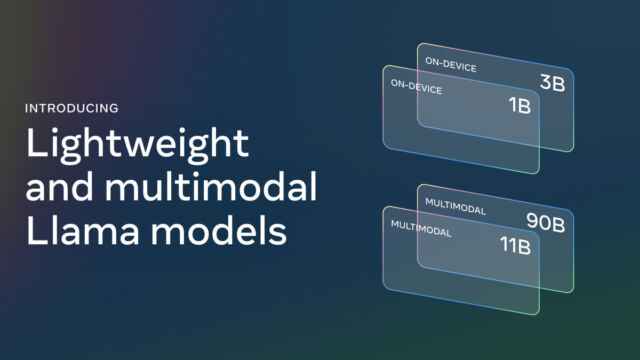

Meta launches Llama 3.2

On Wednesday, Meta announced the release of Llama 3.2, a significant update to its open-weights AI model lineup that we've covered extensively in the past. The new release includes vision-capable large language models (LLMs) in 11B and 90B parameter sizes, as well as lightweight text-only models of 1B and 3B parameters designed for edge and mobile devices. Meta claims the vision models are competitive with leading closed-source models on image recognition and visual understanding tasks, while the smaller models reportedly outperform similar-sized competitors on various text-based tasks.

Willison ran some experiments with the smaller 3.2 models and reported impressive results for their size. AI researcher Ethan Mollick showed off running Llama 3.2 on his iPhone using an app called PocketPal.

Meta also introduced the first official "Llama Stack" distributions, created to simplify development and deployment across different environments. As with previous releases, Meta is making the models available for free download, with license restrictions. The new models support long context windows of up to 128,000 tokens.

Google's AlphaChip AI speeds up chip design

On Thursday, Google DeepMind announced what appears to be a significant advancement in AI-driven digital chip design: AlphaChip. It began as a research project in 2020 and is now a reinforcement learning method for designing chip layouts. Google has reportedly used AlphaChip to create "superhuman chip layouts" in the last three generations of its Tensor Processing Units (TPUs), which are chips similar to GPUs designed to accelerate AI operations. Google claims AlphaChip can generate high-quality chip layouts in hours, compared to weeks or months of human effort. (Reportedly, Nvidia has also been using AI to help design its chips.)

Notably, Google also released a pre-trained checkpoint of AlphaChip on GitHub, sharing the model weights with the public. The company reported that AlphaChip's impact has already extended beyond Google, with chip design companies like MediaTek adopting and building on the technology for their chips. According to Google, AlphaChip has sparked a new line of research in AI for chip design, potentially optimizing every stage of the chip design cycle, from computer architecture to manufacturing.

That wasn't everything that happened, but those are some of the major highlights. With the AI industry showing no signs of slowing down at the moment, we'll see how next week goes.